Advanced driver assistance systems (ADAS for short) are here to stay. Advancing at an exponential pace, with data moving at 10 Gbps and more, ADAS lets you know when it's time to take a break and when you are drifting out of your lane, all at the same time. But keeping the technology on track will take the professionalism and skill of every technician performing calibration/recalibration in the bay, every day. Why?

Because properly resetting an ADAS sensor is vital to meeting the ongoing programming and sensor development challenges the technology has faced, and is still facing. There is an intelligence base behind sensor fusion, from bioptic cameras to advanced ESC radar. Last year's sensor developments ranged from chip technology that allows radar units to "see" smaller objects to programming supported by upgraded integrated circuits (ICs) that allow quicker communication among the fused sensors.

This year's ADAS sensor technology debuts at the Consumer Electronics Show (CES) were no different. Texas Instruments highlighted its latest semiconductor, which allows radar to recognize objects at greater range and so supports better, quicker decision-making maneuvers. Meanwhile, Qualcomm and Robert Bosch GmbH teamed up to showcase their contribution to the automotive industry: the first central vehicle computer combining infotainment and ADAS functions on a single system-on-chip (SoC). It now looks like we have the beginnings of a "mainframe" setup in the vehicle.

With all the sensor developments and software upgrades being created for better ADAS, moving us from Society of Automotive Engineers (SAE) Level 2 to Level 3 and beyond, how do we know what's going to work, and what will give us a "headache" on that new dealer delivery down the road?

Like all success stories, it starts with training.

First, we need to recognize that a lot of machine learning (ML), AKA training, has to be performed before we have a bunch of altered 1957 Volkswagen Beetles running around in the coveted AI/AV (artificial intelligence, autonomous vehicle) transportation mode. I am talking about quadrillions upon quadrillions of ML data points, and that is before the vehicle-to-everything (V2X) communication highway even gets us to the point where the vehicle has been programmed to recognize every variable in every driving situation and determine what is best for that particular maneuver. When all is said and done, AI/AV is software created by ML, mimicking human decision-making to perform driving tasks and learning from each turn of the wheel or pump of the brake.

To do this, we start with a lot of data from models, sorted into safety, security and functionality components, looking at everything from the driving environment to vehicle operations. The data must first confirm the security of the programmed processes; if it passes that criterion, it then establishes the degree of certainty of the associated ADAS systems. This process allows the programming and sensor development team to verify, verify, verify before further data collection moves from the lab to roadway operation of equipped cars and light trucks.

This includes analyzing how our new sensor-fusion project relates to tens of thousands of roadway surfaces and conditions: everybody's favorite asphalt assault, the pothole; crown and shoulder conditions; swale pitch; navigable areas; and speed limits versus the vehicle's physical restrictions (e.g., turning radius, wheelbase). Then there's traffic count, weather conditions…right down to how the vehicle reacts to an emergency vehicle or first responder interaction.

The manufacturers are "watching" and correcting by uploading and downloading course-correcting programming, and the Feds are keeping track of the changes as well. In 2022, the National Highway Traffic Safety Administration (NHTSA) recorded 932 vehicle safety recalls affecting more than 30.8 million cars and light trucks; 23 of those recalls had an over-the-air (OTA) patch. That means more training, and more digital-twin lab time to verify and re-verify, for safety's sake.

'Primate with a tutu'

The lab is where sensor recognition begins, before anything can hit the road. For example, take a crosswalk: easily recognized, lab-learned and roadway-documented. Now we add a bicycle, another commonly learned item. Various other items are catalogued as well: dogs, cats, primates, bears, deer…you name it. Next, we add something extraordinary, yet possible: a chimpanzee wearing a pink tutu, pedaling a bicycle across the crosswalk. Yes, I know. Obscure. But we need to train our system for every possible (and almost impossible) situation. Back to the simian crossing the street. The primate and the tutu were each catalogued and lab-learned, and the roadway was recognized. But combine the two, and this combination-object is not recognized. Will the vehicle stop?

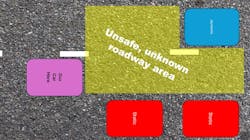

Well, within milliseconds the software compares the object type, its shape, whether it is static or dynamic, and the predictable and free space, while "talking" with the other sensors (radar, lidar, camera) to "compare notes." And it doesn't recognize the object crossing the roadway. The combination hasn't been documented; it hasn't been learned. The vehicle will make contact with the chimpanzee on the bike. Again, it's all about ML.
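The "compare notes" step can be illustrated with a deliberately simplified toy. This is a minimal sketch, assuming a hypothetical catalogue (`LEARNED_OBJECTS`) in which each learned entry is a whole combination of parts, and a hypothetical `compare_notes` function that only assigns a label when every sensor agrees and that exact combination was learned. Real perception stacks use trained classifiers rather than set lookups, and what the planner does with an "unrecognized" object is outside this sketch; the point is only that individually learned parts do not make the combination a learned object.

```python
from dataclasses import dataclass

# Hypothetical catalogue of lab-learned objects. Each entry is the full
# combination of parts that was learned, not the individual parts.
LEARNED_OBJECTS = {
    frozenset({"pedestrian"}),
    frozenset({"pedestrian", "bicycle"}),  # a cyclist
    frozenset({"bicycle"}),
    frozenset({"chimpanzee"}),
    frozenset({"tutu"}),
}


@dataclass(frozen=True)
class Detection:
    sensor: str        # e.g. "camera", "radar", "lidar"
    parts: frozenset   # parts this sensor's classifier reports (toy assumption)
    dynamic: bool      # True if the object is moving


def compare_notes(detections: list) -> tuple:
    """Return (label, dynamic). A label is assigned only when every sensor
    reports the same parts AND that exact combination was learned."""
    dynamic = any(d.dynamic for d in detections)
    reported = {d.parts for d in detections}
    if len(reported) == 1:
        parts = next(iter(reported))
        if parts in LEARNED_OBJECTS:
            return "+".join(sorted(parts)), dynamic
    return "unrecognized", dynamic


cyclist = [
    Detection("camera", frozenset({"pedestrian", "bicycle"}), True),
    Detection("lidar", frozenset({"pedestrian", "bicycle"}), True),
]
chimp = [
    Detection("camera", frozenset({"chimpanzee", "tutu", "bicycle"}), True),
    Detection("lidar", frozenset({"chimpanzee", "tutu", "bicycle"}), True),
]
print(compare_notes(cyclist))  # ('bicycle+pedestrian', True)
print(compare_notes(chimp))    # ('unrecognized', True)
```

Note that the toy still knows the chimpanzee is moving; it simply has no learned label for the combination.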

The vehicle has to recognize a dynamic object anywhere in its path, out to its maximum sensing range. At the same time, it is noting static objects along the side of the road (e.g., parked cars, garbage cans) that have previously been "learned" and accepted.

Then there is the "unsafe" free space ahead of the vehicle: everything in its path beyond the dynamic and static objects surrounding the car or light truck, with the planned path running right down the center of that unsafe zone. Why is it considered "unsafe" even when nothing is in the path? Because an object, whether lab-trained or unrecognized like the tutu-wearing primate on a bike, could pass in front of the vehicle at any moment.
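The split between in-path objects, roadside objects and the "unsafe" free space can be sketched geometrically. This is a minimal sketch, assuming a straight planned path along the vehicle's centreline and a hypothetical corridor half-width (1.5 m) and maximum range (150 m); the names `TrackedObject` and `assess_path` are illustrative, not any OEM's software. Even with nothing in the corridor, the status stays "unsafe free space" because something could still enter it.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    name: str
    x_m: float      # distance ahead of the front bumper, metres
    y_m: float      # lateral offset from the planned-path centreline, metres
    dynamic: bool   # True if moving


def assess_path(objects, corridor_half_width_m=1.5, max_range_m=150.0):
    """Bucket objects for a straight planned path down the centre of the corridor.
    The corridor width and range defaults are hypothetical values."""
    result = {"in_path": [], "roadside": [], "out_of_range": []}
    dynamic_in_path = False
    for obj in objects:
        if not (0.0 < obj.x_m <= max_range_m):
            result["out_of_range"].append(obj.name)
        elif abs(obj.y_m) <= corridor_half_width_m:
            result["in_path"].append(obj.name)
            dynamic_in_path = dynamic_in_path or obj.dynamic
        else:
            result["roadside"].append(obj.name)
    # An empty corridor is still treated as unsafe free space: something could enter it.
    if dynamic_in_path:
        result["status"] = "dynamic object in path"
    elif result["in_path"]:
        result["status"] = "static object in path"
    else:
        result["status"] = "unsafe free space (keep monitoring)"
    return result


scene = [
    TrackedObject("parked car", 20.0, 3.5, False),
    TrackedObject("garbage can", 35.0, -3.0, False),
]
print(assess_path(scene)["status"])  # unsafe free space (keep monitoring)
scene.append(TrackedObject("bicycle", 40.0, 0.4, True))
print(assess_path(scene)["status"])  # dynamic object in path
```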

With ADAS development, it's all about the vehicle's programmed aptitude: its "view" ahead while in forward motion, checking for any recognized collision points in its path and reacting to what's in the road ahead. That requires training, retraining, verifying and more training across every possible situation and condition.

And this training window is tight.

The operational design domain (ODD) on the road to AI/AV covers road types, vehicles, pedestrians, the weather surrounding the vehicle and communication methods. Everything from dedicated short-range communication (DSRC) and 5G/6G IoT (Internet of Things) links for traffic controls to global navigation satellite systems (GNSS) is part of the ML process. And the vehicle needs to learn and mimic driver behavior in every type of driving situation. Sounds easy, doesn't it? So, we should all be driving AI/AV in the very near future. Right?

Not quite. Let us take a closer look at the statistics.

The average two-lane, four-way-stop intersection has a multitude of possible situations. Picture four vehicles sitting at the stop signs, four pedestrians at the crosswalk approaches and four bicyclists riding in-lane. Seems like a semi-simple intersection situation, right? Well, those 12 road users at the intersection have a statistical possibility of more than 131,000 outcomes. That's one simple intersection. In my training, I point out that one medium-sized township can have approximately 20-plus intersections like this one within a two-mile radius, which can yield more than three million intersection scenarios. Roadbed conditions, agriculture along the median, construction cones and light pole locations…it all adds up.
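The arithmetic behind the township figure can be checked quickly. This sketch takes the 131,000-outcome figure as given (its derivation is not shown here) and assumes each intersection contributes its outcomes independently; the extra condition counts in the last line (four roadbed states, three weather states) are hypothetical, included only to show that each added condition multiplies the total rather than adding to it.

```python
import math

# Figures from the article; the per-intersection count is taken as given.
OUTCOMES_PER_INTERSECTION = 131_000
TARGET = 3_000_000


def township_scenarios(intersections: int) -> int:
    """Assumes each intersection contributes its outcomes independently."""
    return intersections * OUTCOMES_PER_INTERSECTION


def with_conditions(base: int, condition_counts: list) -> int:
    """Each independent condition (roadbed, weather, ...) multiplies the count."""
    return base * math.prod(condition_counts)


# Smallest number of intersections whose total is strictly more than TARGET.
min_intersections = TARGET // OUTCOMES_PER_INTERSECTION + 1
print(min_intersections)                      # 23
print(township_scenarios(20))                 # 2620000
print(township_scenarios(min_intersections))  # 3013000
# Hypothetical: 4 roadbed states x 3 weather states
print(with_conditions(township_scenarios(23), [4, 3]))  # 36156000
```

At 131,000 outcomes each, it takes 23 intersections (within the "20-plus" range) to pass three million scenarios.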

Five steps of sensor learning

There’s a lot of ML to do and the automakers need the technicians’ help.

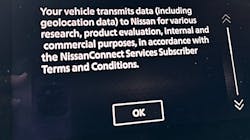

The manufacturers are watching their brands. Starting with last year's models, they let consumers know that, even though they had purchased the car or light truck, the OEM was gathering metrics. If you didn't want your connected devices "tapped," you had to leave them disconnected from the vehicle.

Remember, they are collecting quadrillions upon quadrillions of data points and are constantly uploading and downloading vehicle information, from scenarios like a turn signal being activated while the steering angle sensor (SAS) reads beyond zero degrees, to how many times the lane departure warning (LDW) has been activated within a single drive sequence. It's all recorded and collected. But if a vehicle is not tracking properly, or has not been calibrated/recalibrated properly, the collected data is skewed.

Tires track on their tread. If the tread deviates due to an impact separation, poor alignment angles or lack of rotation (you name the situation), the vehicle's ADAS sensor-fusion reactions will be based on false-positive activity: for example, an LDW activation triggered because the driver is "fighting" the wheel due to poor tire conditions. The module doesn't know that there is a tire issue, only that the operator is:

1) Fighting the wheel (SAS)

2) Departing the lane (LDW) without turn signal activation
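The module's limited view can be sketched as a classifier that has no tire input at all. This is a minimal sketch, assuming hypothetical per-sample fields (`sas_deg`, `turn_signal_on`, `ldw_active`) and a hypothetical 2-degree threshold for "fighting the wheel"; it is not any OEM's logging format. A car pulling because of a tread or alignment problem produces records identical to a genuinely drifting driver.

```python
from dataclasses import dataclass


@dataclass
class DriveSample:
    sas_deg: float         # steering angle sensor reading, degrees (0 = straight ahead)
    turn_signal_on: bool
    ldw_active: bool       # lane departure warning active in this sample


SAS_THRESHOLD_DEG = 2.0    # hypothetical threshold for "fighting the wheel"


def module_view(sample: DriveSample) -> str:
    """What the module can infer. Note there is no tire-condition input at all."""
    fighting_wheel = abs(sample.sas_deg) > SAS_THRESHOLD_DEG
    unsignalled_departure = sample.ldw_active and not sample.turn_signal_on
    if fighting_wheel and unsignalled_departure:
        return "driver correction + unsignalled lane departure"
    if unsignalled_departure:
        return "unsignalled lane departure"
    if fighting_wheel:
        return "driver correction"
    return "normal"


def count_ldw_activations(samples: list) -> int:
    """Count LDW activations in one drive sequence as off-to-on transitions."""
    count, previous = 0, False
    for s in samples:
        if s.ldw_active and not previous:
            count += 1
        previous = s.ldw_active
    return count


# A car pulling because of a tire problem, as the module sees it.
pulling = [
    DriveSample(2.5, False, False),
    DriveSample(3.1, False, True),
    DriveSample(3.0, False, True),
    DriveSample(2.8, False, False),
    DriveSample(3.2, False, True),
]
print(module_view(pulling[1]))          # driver correction + unsignalled lane departure
print(count_ldw_activations(pulling))   # 2
```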

Therefore, this data (any data), good or bad, will be calculated in, and the skewed average prevails. More ML is needed to push this "bad" data into outlier status so the model can concentrate on the more accurate data.
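How a few bad records drag an average, and how they can be pushed into outlier status, can be shown with a small example. This sketch swaps in a standard robust-statistics technique, the median/MAD-based modified z-score with the common 3.5 cutoff, purely as an illustration; it is not presented as the method any automaker uses. The LDW counts are hypothetical, with the last two values standing in for vehicles with tire or alignment problems.

```python
import statistics


def modified_z_outliers(values, threshold=3.5):
    """Flag values whose modified z-score (median/MAD based) exceeds threshold."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread to measure against
    return [v for v in values if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical LDW activations per drive sequence across nine vehicles;
# the last two stand in for cars with tire or alignment problems.
ldw_counts = [0, 1, 0, 2, 1, 0, 1, 14, 12]

print(statistics.mean(ldw_counts))      # about 3.44, dragged up by two vehicles
print(statistics.median(ldw_counts))    # 1
print(modified_z_outliers(ldw_counts))  # [14, 12]
```

The median and MAD barely move when a handful of skewed vehicles report, which is why they are a common choice for flagging outliers before averaging.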

Before any vehicle is racked, the technician needs to revisit the OEM site and check for any alignment or calibration/recalibration technical service bulletins (TSBs) and recalls. Remember the 932 safety recalls reported to NHTSA in 2022? Those recalls can include alignment angles and procedures.

The widely known in-the-bay saying "set the toe and let it go" no longer applies. And "in the green" may not be good enough, especially when it comes to the thrust angle on vehicles equipped with sensor fusion. "Zero" is the holy grail. Camber, caster and toe also need to be within manufacturer specs. Can't get the numbers in the green by adjusting the original equipment? You may have to perform a cradle shift, install OEM or aftermarket camber/caster offset bolts, or have a structural technician recheck measurements to diagnose an out-of-spec frame.
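Why "in the green" is not the whole story can be sketched as a simple check. This is a minimal sketch, assuming thrust angle is computed as half the difference of the individual rear toe readings (toe-in positive; sign conventions vary by aligner), and using hypothetical spec windows and a hypothetical ±0.05° thrust tolerance purely for illustration. Always use the OEM's published specifications. In the example, camber, caster and toe all pass, yet the thrust angle is still off zero.

```python
from dataclasses import dataclass


@dataclass
class SpecRange:
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


def thrust_angle_deg(left_rear_toe_deg: float, right_rear_toe_deg: float) -> float:
    """Half the difference of individual rear toe (toe-in positive; conventions vary)."""
    return (left_rear_toe_deg - right_rear_toe_deg) / 2.0


# Hypothetical spec values for illustration only; use the OEM's published specs.
SPECS = {
    "camber_deg": SpecRange(-1.0, 0.0),
    "caster_deg": SpecRange(3.5, 5.5),
    "total_toe_deg": SpecRange(0.0, 0.2),
}
THRUST_TOLERANCE_DEG = 0.05  # hypothetical window around the "zero" target


def check_alignment(readings: dict, left_rear_toe_deg: float, right_rear_toe_deg: float) -> list:
    """Return a list of failures; an empty list means everything is within the windows."""
    failures = [name for name, spec in SPECS.items() if not spec.contains(readings[name])]
    thrust = thrust_angle_deg(left_rear_toe_deg, right_rear_toe_deg)
    if abs(thrust) > THRUST_TOLERANCE_DEG:
        failures.append(f"thrust_angle_deg={thrust:+.3f}")
    return failures


readings = {"camber_deg": -0.5, "caster_deg": 4.2, "total_toe_deg": 0.1}
print(check_alignment(readings, 0.12, -0.02))  # ['thrust_angle_deg=+0.070']
print(check_alignment(readings, 0.05, 0.05))   # []
```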

For the last piece of the programming puzzle: in-the-bay ADAS calibration or recalibration. When performed properly, after the same-day alignment correction has been verified via printout, an ADAS-qualified technician follows the manufacturer's specifications through to a successful calibration/recalibration. Again, the rules must be followed to arrive at a correct calibration point. This means the process needs to take place in a controlled area. Parking lots, busy shops with objects on the walls (belts, hoses, posters/banners) or light entering through uncovered windows are not ideal. A clean area with unmarked floors, walls and doors is the best option. And it's not out of reach for any ADAS professional; there is plenty of warehouse space available for long-term or temporary rent that fits the bill.

And most of all, remember to check the ADAS tablet and aligner for any updates each morning while making the shop coffee and opening the doors for business.
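The pre-calibration steps above can be captured as a simple go/no-go gate. This is a minimal sketch; the field names and the `blockers` function are hypothetical, chosen to mirror the checks described here (TSBs and recalls, a same-day alignment printout, a controlled bay, and same-day tablet and aligner updates). It is not a feature of any scan tool or aligner.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class CalibrationPrecheck:
    tsbs_and_recalls_checked: bool
    alignment_printout_date: Optional[date]  # None if no printout on file
    bay_walls_clear: bool        # no belts, hoses, posters/banners in the target area
    windows_covered: bool        # no stray light entering the bay
    floor_unmarked: bool
    tablet_updated_today: bool
    aligner_updated_today: bool


def blockers(check: CalibrationPrecheck, today: date) -> list:
    """Return the reasons calibration should not start yet (empty list = go)."""
    problems = []
    if not check.tsbs_and_recalls_checked:
        problems.append("check OEM site for TSBs and recalls")
    if check.alignment_printout_date != today:
        problems.append("no same-day alignment printout")
    if not (check.bay_walls_clear and check.windows_covered and check.floor_unmarked):
        problems.append("bay is not a controlled area")
    if not (check.tablet_updated_today and check.aligner_updated_today):
        problems.append("ADAS tablet or aligner not updated today")
    return problems


today = date.today()
ready = CalibrationPrecheck(True, today, True, True, True, True, True)
print(blockers(ready, today))  # []
```

Keeping the gate as a list of reasons, rather than a single pass/fail flag, tells the technician exactly what to fix before the vehicle goes into the calibration area.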

Why is sensor-fusion programming so dependent upon the tech in the bay?

Reducing, and ideally eliminating, false positives in the bay will lead to more robust programming and sensor development and, therefore, a quicker path to the autonomous vehicle becoming the main mode of transportation on our city and rural roadways.